The latest wave of GenML tools is truly remarkable. I believe all creative work is going to be impacted, including product management. But in what way? One answer is already on offer. In recent months Twitter and LinkedIn have been flooded with “definitive” lists of ChatGPT prompts designed to produce product artifacts: Objectives and Key Results, user stories, market size assessments, strategic models, user interview scripts… You can find hundreds of example prompts for any conceivable model and framework. The results are often impressive. The bot is able to generate complete and convincing artifacts, which you can further improve on by tweaking the prompts. Sounds almost too good to be true.

Maybe, but I feel we should proceed with great caution. The ease of producing artifacts may come with some serious tradeoffs.

To understand why, let’s look at an example.

The Elusive Big Idea

A few years ago I consulted for a small company developing AdTech products for social media. The company had a number of existing SaaS products, but none had found product/market fit and usage was very low. The problem wasn’t hard to spot. The founders kept launching projects around promising ideas without much validation. In practice, developing these products took much longer than planned (the classic planning fallacy), and market demand was far lower than expected.

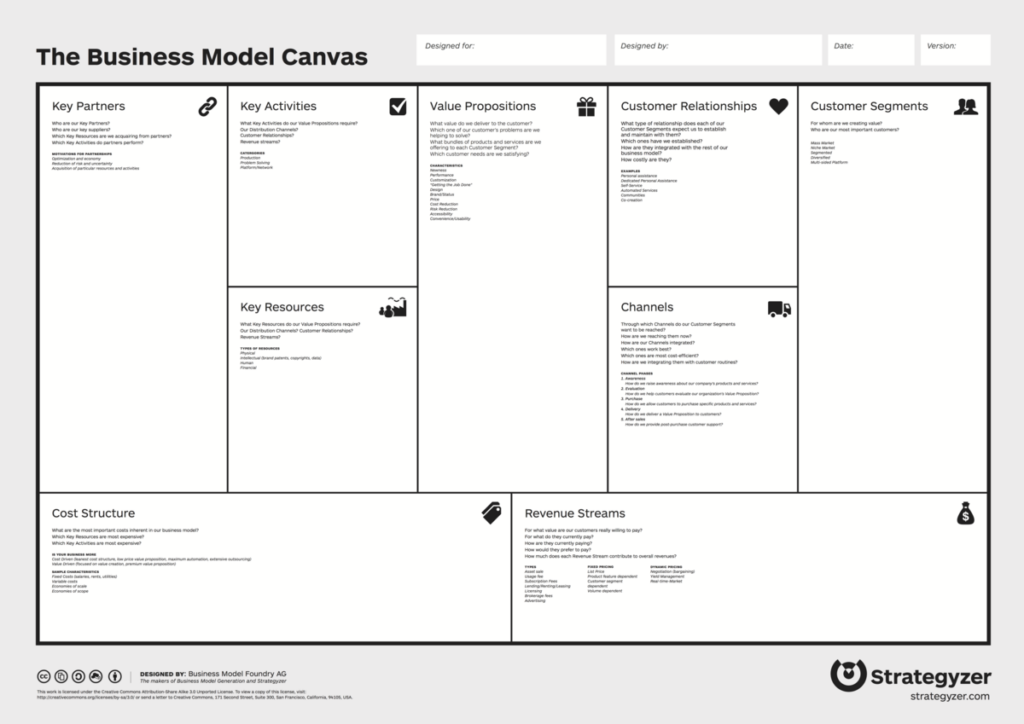

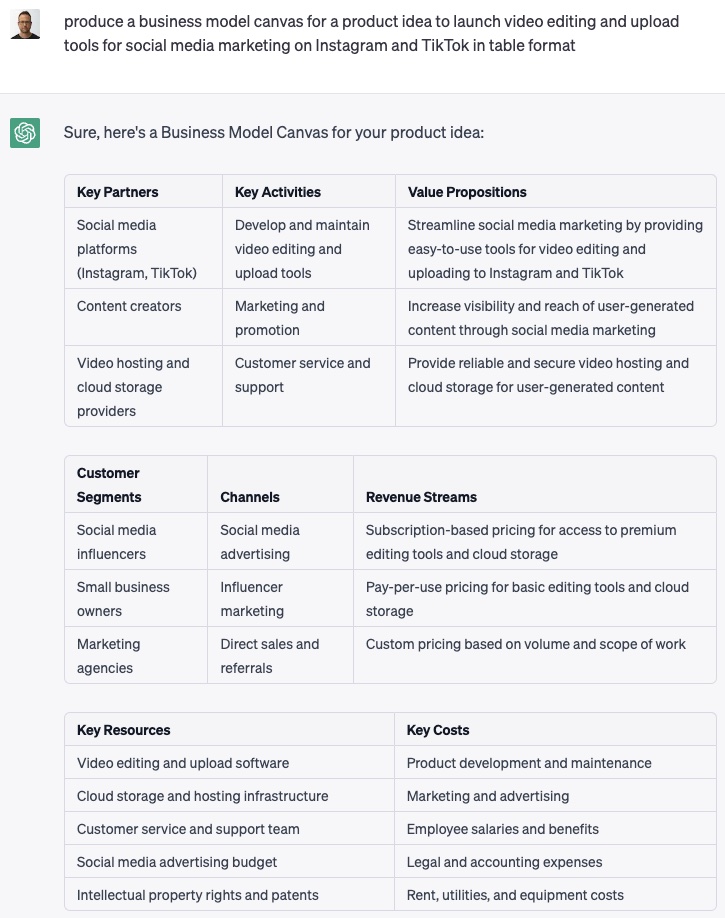

To help, I introduced the product team to Strategyzer’s Business Model Canvas (BMC), a powerful tool for assessing new product ideas.

Business Model Canvas | source: Strategyzer

The next time the CEO came up with a new must-have idea, one of the product managers created a draft BMC within a couple of days and took it to management review. Looking at the BMC brought the real potential and costs of the idea into focus. The conclusion was that the idea wasn’t quite as strong as first thought and likely would never justify its cost. The management team decided to drop the idea, and everyone, including the CEO, was happy with this decision.

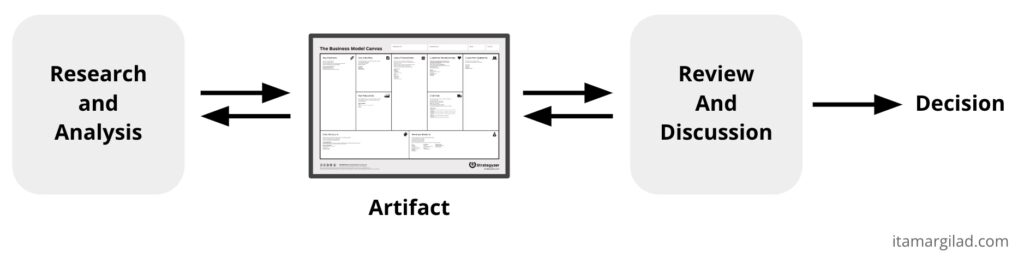

Let’s take a look at what happened here:

- The Business Model Canvas presented a model — a series of questions to answer: Who is this product targeted at? What is the key value proposition? What resources will we need to obtain to offer this service? How many paying customers will we need to break even?

- To answer these questions the product manager had to conduct research and analysis and produce some estimates. For example, he had to consider which types of ad agencies had a strong need for this product. While the answers were mostly guesswork, these were smaller, and arguably better, guesses than the big looming question “is this a good idea?”.

- The PM produced the first version of the BMC (with some parts marked as To Be Determined, or TBD).

- The PM presented the BMC to the product team and to management. This helped create a shared understanding of the idea and its potential.

- A discussion followed. But unlike the discussions the company had held in the past that tried to guess at a high level whether an idea is good or not, this one was structured and more concrete. They reviewed the various parts of the BMC and discussed the answers to each part.

- A decision was made to park this idea.

This is exactly how models and frameworks are supposed to work. They help us reduce a complex reality into a set of important questions. Answering these questions drives a shared understanding, which leads to concrete discussions and better decisions. While the artifact is an important part of this process, it’s just a communication tool. The goal is never just to produce the artifact.
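One of the canvas questions above, how many paying customers are needed to break even, shows how a model turns one big guess into several smaller ones. The following is a minimal Python sketch of that arithmetic, not the calculation the team actually used. The function name and every figure in it are hypothetical, chosen only for illustration.

```python
import math


def break_even_customers(fixed_monthly_cost, monthly_price, variable_cost_per_customer):
    """Smallest number of paying customers whose margin covers the fixed costs.

    All inputs are estimates; the function only does the arithmetic.
    """
    margin = monthly_price - variable_cost_per_customer
    if margin <= 0:
        raise ValueError("price must exceed the variable cost per customer")
    return math.ceil(fixed_monthly_cost / margin)


# Hypothetical guesses (illustrative numbers, not from the company in the story).
# Keeping an optimistic and a pessimistic case makes the uncertainty visible
# instead of hiding it behind a single confident number.
scenarios = {
    "optimistic": {"fixed_monthly_cost": 40_000, "monthly_price": 500, "variable_cost_per_customer": 100},
    "pessimistic": {"fixed_monthly_cost": 60_000, "monthly_price": 300, "variable_cost_per_customer": 100},
}

for name, guess in scenarios.items():
    print(name, break_even_customers(**guess))
```

Each input is still a guess, but it is one that can be researched, challenged in review, or marked as TBD on its own.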

Enter the Robots

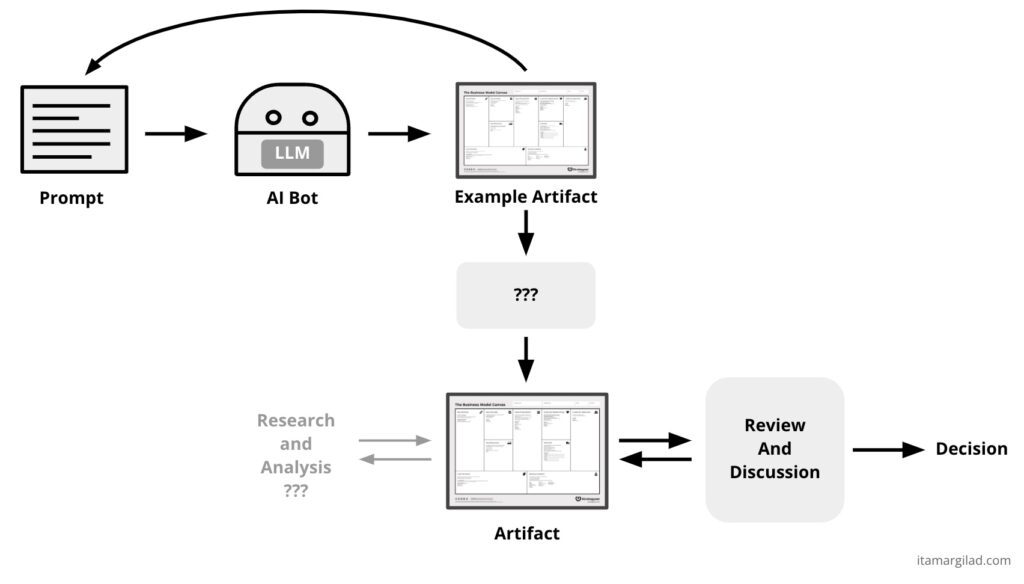

Now let’s imagine this same scenario with ChatGPT.

- The product manager starts by prompting the GenML robot to produce a BMC. To make the output useful he’ll need to feed the context of the company and of the users, as much as these things can be expressed in text, into the prompt. It usually takes multiple rounds of tweaking the prompt to get to a satisfactory result.

- The bot finally produces a Business Model Canvas.

- The bot-BMC is full of potentially useful content, but there are two interesting points to note: a) There are no TBDs, no Ifs, and no Maybes. It’s a definitive, complete, and confident artifact. Why? Because that’s what the current GenML text bots are designed to do: they give us the most convincing output they can produce, even if it’s not well-researched or even factually correct. They’re designed to win the Turing Test, not to deal with shades of gray. b) It’s likely that the BMC will make the idea look good, simply because the bot has probably been trained on positive examples rather than negative ones. You can ask the bot to produce both pro and con BMCs, and it will happily comply, but will anyone do that? And which one should you use?

- The PM needs to translate the bot-BMC into an artifact the company will use. In the best-case scenario he’ll use it solely for inspiration and still do his own research and analysis and produce a fresh BMC. But will anyone do this cognitively-hard, time-consuming work when there’s already a ready-made artifact at hand (especially after all the time we invested in it)? I believe most of us will simply attempt to copy-and-paste from the bot-artifact. If we’re short on time, we’ll just tweak it and call it a day.

- The PM shares the BMC with the team and with management. We’re creating a shared understanding, but it’s heavily biased by what the bot produced, which is based on the average thing people tend to say about such questions on the Web, on Reddit, and elsewhere. Contrary to what you might think, this is a weak signal. Common wisdom may not apply to your particular case, and many good ideas defy conventional thinking.

- The BMC is reviewed. If the review is thorough, the weaknesses of the artifact will be flushed out and the PM will be asked to go back and redo it. But there’s a risk, again, that instead of doing the cognitively hard work of challenging the artifact, the leadership team will just buy the positive, polished message the BMC communicates.

- A decision is made.

Obviously, no one is planning to delegate judgment to GenML systems (right?). We see them as a convenient shortcut, but we’ll still expect to think for ourselves. The problem is that the bots offer a compelling temptation. Research, analysis, estimation, review, and decision-making require what psychologist Daniel Kahneman calls System-2 thinking: slow, effortful, intentional, and tiring contemplation. In the book Thinking, Fast and Slow, Kahneman explains that most of us avoid using our System-2 thinking when there are convenient shortcuts available, even if those lead us to the wrong answer. GenML just created a whole new class of mental shortcuts for us to fall back on.

The Artifact Factory

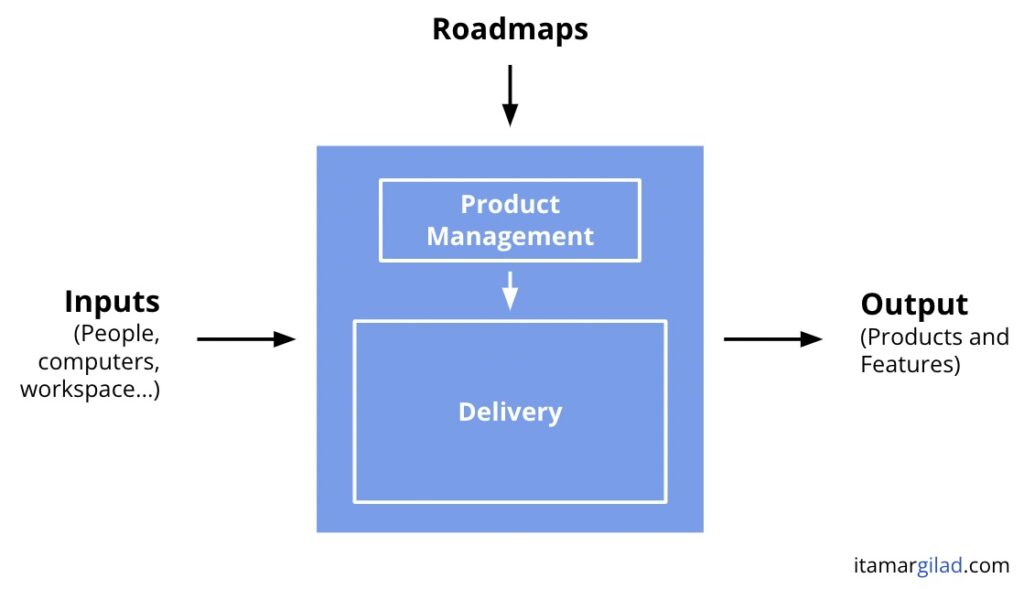

But there may be an even more pernicious aspect to these bot artifacts. In some organizations product managers (now sometimes called Product Owners) work in feature factories that are measured on rate of output. The PMs/POs themselves are seen as a sort of 1-person “artifact factory” producing an endless stream of backlog items and user stories to feed into Agile dev.

These product managers are under high pressure to produce, and I suspect the temptation to rely on the bots is going to be even higher in their case. Can a GenML system really produce useful ideas and requirements without a deep understanding of the product, the users, the market, and the company? I have my doubts, but I assume that’s what’s already happening.

Taking one logical step further, we can imagine outsourcing artifact production to external agencies as a cost-cutting measure. You can already find agencies that will design and product-manage your product for you, but now that the bots are here, these agencies will be able to offer much better per-artifact pricing, and produce much more (a bit like content farms do for websites). We might see a race to the bottom that greatly devalues real product management and design work (yes, design bots are coming too).

The Bots Aren’t The Problem

I’m not saying that GenML tools are necessarily bad. In fact I’m sure we will see many good use cases. Even the one I described — creating ready-made artifacts — may help companies adopt helpful models and processes, think more broadly, and improve the speed and quality of execution. The next few years will be very interesting for product management, and I’m optimistic that GenML will empower us to create better products quicker. Still, we shouldn’t underestimate the ability of people and organizations to misuse, overuse, and abuse tools and products.

As usual, the issue isn’t the technology but how we use it, and that depends on our beliefs and values. In some companies the artifact is seen as a way to collaborate and have meaningful discussions. In others it’s an important goal in its own right. In some organizations saying things that aren’t necessarily true in a compelling and polished way will get you fired; in others it’ll get you promoted to management. Similarly, in some companies GenML will elevate and empower people and teams, while in others it will likely amplify the problems the company is suffering from. For all their power, the bots cannot save us from ourselves.

Image source: DALL-E